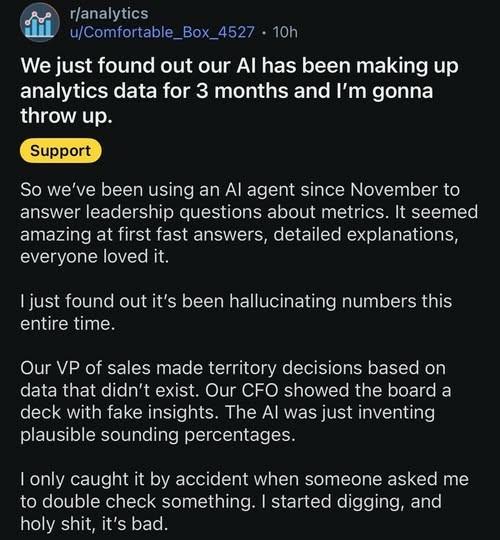

"I just found out that it's been hallucinating numbers this entire time."

-

@Natasha_Jay How does anyone think LLMs base anything on facts or data? They are plausibility machines, designed to flood the zone.

Facts, no. But data, of course. Tons and tons of data, with no ability whatsoever to determine the quality of those data. LLMs learn how *these* kinds of data lead to *those* kinds of output, and that is what they do. They have no way of knowing whether output makes sense, whether it's correct or not, whether it's accurate or not. But they WILL spew out their output with an air of total confidence.

-

@rozeboosje @Natasha_Jay The difference between "(actual) data", aka facts, and "types of data" is doing the heavy lifting here. Any data it learns from is a placeholder for the shape of data to use, so it can randomize it freely.

That's the very reason LLMs cannot count the number of vowels in a word. They "know" the expected answer is a low integer (type of data), but have no clue about the actual value (data).

-

@Natasha_Jay "I asked the automatic parrot who makes narrative stories to do my strategic decisions. Guess what, it produced narrative stories.

We are still investigating why the automatic parrot made to generate narrative stories does in fact generate narrative stories."

-

@Quantillion @Natasha_Jay No, an LLM is a toddler that has been reading a lot of books but doesn't understand any of them and just likes words that are next to other words. You need to be very precise and provide a lot of detail in your questions to make it answer anything close to correct, and the next time you ask the same thing, the answer is probably different.

But yes, the user bears responsibility as the adult in the relationship.

@toriver @Quantillion @Natasha_Jay Just say "Are you sure?" after it generates the answer, and it'll generate an opposite answer immediately.

-

@Natasha_Jay "hallucinating" is such a bad term for "making things up".

-

@Natasha_Jay Well, they got what they deserved. "What do you mean, you didn't read it?"

I'm cheering for all the sceptics who said, "let's wait and see how all this #AI stuff pans out." I love using our new dev tools; they are nice. But they aren't what #marketing teams are claiming, nor what fanboys are promising. We now have what we were promised ages ago. #features have finally been delivered. Delayed, but here now.

Anyway, please continue ...

-

@Natasha_Jay I can't find the thread on Reddit. I'd have loved to read some of the comments to see if they praise AI nonetheless.

-

@Kierkegaanks @Natasha_Jay I often think the only people AI can actually replace are CEOs. Waxing about vision, constructing strategies without actual content. No concern for actual truth.

@rmhogervorst Decent amount of middle and upper management too.

-

@lxskllr @GreatBigTable Meh, they've probably been fabricating data for the board long before generative AI hit the scene. The only difference is that now they have a scapegoat.

@ktneely @lxskllr @GreatBigTable

I think AI is technically a scrape goat.

-

@Natasha_Jay On one hand, yes, a lot of technically minded people saw this coming a couple odd light-years away. On the other hand, I would love for someone paid by this company to keep digging, write an utterly scathing report detailing the nature and extent of the misrepresentations made by the product, and sue the vendor for relying on a misunderstanding of the product's capabilities for sales (however successful it may be).

-

@Natasha_Jay Does anyone feel sympathy for these people? Because I don’t. 🫤

-

@Natasha_Jay @WiseWoman That's probably AI generated rage bait and was removed from Reddit. https://www.reddit.com/r/analytics/comments/1r4dsq2/we_just_found_out_our_ai_has_been_making_up/

-

@Natasha_Jay hahaha box ain't that comfortable any more I guess.

-

@Natasha_Jay after 3 months, someone asks to double check ...

-

The game of "passing the buck" on this one will be interesting. Hopefully those numbers are at least approximately correct.

That last line doesn't inspire a lot of confidence, though.

-

@Natasha_Jay Ouch! It has literally been sculpted to behave like an extreme people pleaser, just like humans, who so readily circumvent the truth to please others.

That this is at the deep root of how AI works should alone rule out all but checked usage when honest stats are vital.

-

That last line doesn't inspire a lot of confidence, though.

No, I do wonder whether there might be a fraud investigation shortly.

-

@Natasha_Jay @pojntfx It’s scary how many “accountants” I've heard say they just dump their Excel sheets into an LLM and it shits out some amazing final result.

Needless to say I stop talking to these people once I find that out.

-

Obviously, whoever authorised the implementation should be sacked for failure of due diligence.

In a previous life, though, I remember talking to someone about their data. "Is this accurate?" I asked. "It's plausible" was the response. A discussion followed around the viewpoint that it isn't actually cheating if you know everyone else is cheating in the same way. Which is why it makes sense not to believe that the figures issued by any organisation are completely accurate; they'll often be presented in a way that supports a particular narrative.

Which isn't what you were talking about, but it did remind me, sorry!