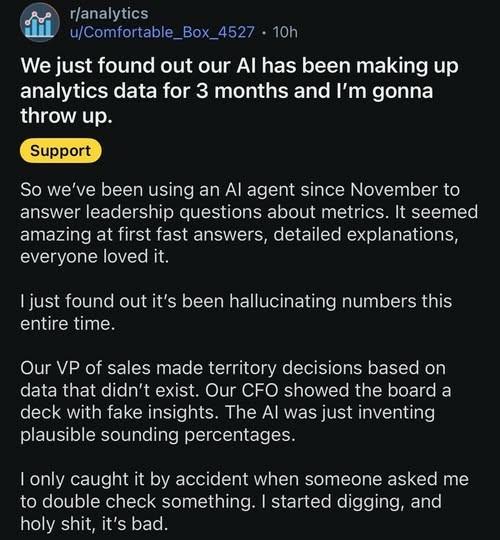

"I just found out that it's been hallucinating numbers this entire time."

-

@Natasha_Jay This really is a major issue with #AI.

Even if you use things like #Gemini "Deep Research", it still loves to make up things it couldn't research properly. For instance, I once tried to use it to research axle ratios of cars, and every time I repeated the same request, it came up with different numbers.

(It can come up with decent results though for topics where lots of scientific papers are available, like life cycle emissions of vehicles with different propulsion types.)

-

@stragu @Natasha_Jay No idea.

@stragu @Natasha_Jay @drahardja someone says it's AI generated, maybe that?

-

@taurus @Natasha_Jay Wait, "plausible sounding percentages" is not enough checking?

@Natasha_Jay @goedelchen uh no perhaps one should actually check

you know... the thing a business has done since... ever? Since the invention of business, you keep an eye on the operation

-

@Natasha_Jay "Flounder, you can't spend your whole life worrying about your mistakes! You fucked up. You trusted us! Hey, make the best of it!"

-

@Natasha_Jay @goedelchen ah I think I answered too seriously

may I advise using a /s when saying things the average stupid person would write non-jokingly

-

@Natasha_Jay

Well, AI for professionals & experts is a tool for the expert who is in charge and responsible.

The kind of use of AI described here is for *general public* AI, i.e. where the user has no idea how correct it is & shouldn't even have to care as long as it is reasonably plausible.

Professionals, experts & businesses can NEVER blame the "AI" for the hallucinations they take as truth.

@Quantillion @Natasha_Jay No, an LLM is a toddler that has been reading a lot of books but doesn’t understand any of them and just likes words that are next to other words. You need to be very precise and provide a lot of details in your questions to make it answer anything close to correct, and the next time you ask the same thing the answer is probably different.

But yes, the user bears responsibility as the adult in the relationship.

-

@Natasha_Jay Sounds plausible.

-

@Natasha_Jay

Hehe, they are using randomization to make the chatbot look more "intelligent"

See this:

https://towardsdatascience.com/llms-are-randomized-algorithms/

-

@Natasha_Jay Vibe work is not work.

-

@Epic_Null @Natasha_Jay That's bad, but honestly--switching to a new system without ever double checking anything?

Everyone involved should be fired, including the #AI

@davidr @Epic_Null @Natasha_Jay Fire the bloody management. They keep pushing for "use more AI". If you don't, you are considered to not be a team player, be obstructive, hinder the company and all these things.

-

@Epic_Null @Natasha_Jay @davidr yeah it's a system failure.

the failure is so bad you need to investigate how such a bad decision could have ever been made and you need to change your process

@taurus @Epic_Null @Natasha_Jay @davidr but... But... That would lead right up to the board of directors and shareholders. These people are by definition faultless. The eminent purpose of a corporation is to extract wealth without consequences reaching this select group of shitheads.

-

I don't get it. How did people immediately trust AI as soon as our fascist techbro overlords ordered us to?

Most of our friends ask ChatGPT for all their important life decisions now.

It takes extremely obvious fuckups like the Flock Superbowl ad to make people pause for a second. Usually, we gobble up whatever the oligarchs ram down our throats.

-

@Kierkegaanks @Natasha_Jay

This proves AI can replace CFOs and CEOs!

@Kierkegaanks @Natasha_Jay I often think the only people AI can actually replace are CEOs. Waxing about vision, constructing strategies without actual content. No concern for actual truth.

-

@Nerde @Kierkegaanks @Natasha_Jay Those are the easiest people to replace in most bigger companies.

-

@Natasha_Jay How does anyone think LLMs base anything on facts or data? They are plausibility machines, designed to flood the zone.

Facts, no. But data, of course. Tons and tons of data, with no ability whatsoever to determine the quality of those data. LLMs learn how *these* kinds of data lead to *those* kinds of output, and that is what they do. They have no way of knowing whether output makes sense, whether it's correct or not, whether it's accurate or not. But they WILL spew out their output with an air of total confidence.

-

@rozeboosje @Natasha_Jay the difference between "(actual) data", aka facts, and "types of data" is doing the heavy lifting here. Any data it learns from is a placeholder for the shape of data to use, so it can randomize it freely.

That's the very reason LLMs cannot count the number of vowels in a word. They "know" the expected answer is a low integer (type of data), but have no clue about the actual value (data).

-

@Natasha_Jay "I asked the automatic parrot who makes narrative stories to do my strategic decisions. Guess what, it produced narrative stories.

We are still investigating why the automatic parrot made to generate narrative stories does in fact generate narrative stories."

-

@toriver @Quantillion @Natasha_Jay Just say "Are you sure" after it generates the answer, and it'll generate an opposite answer immediately.

-

@Natasha_Jay "hallucinating" is such a bad term for "making things up".